Designing Agentic AI: Architecture and Development Strategies

Artificial Intelligence is evolving beyond task-based automation and decision support. The new frontier is agentic AI—systems that not only process commands but operate as semi-autonomous entities capable of goal-directed behavior, environmental interaction, and strategic decision-making. Designing such agentic AI requires careful attention to architecture, modularity, safety constraints, and developmental maturity.

In this article, we’ll explore how to design and develop agentic AI systems with scalable architectures, memory frameworks, and ethical considerations for real-world applications.

What is Agentic AI?

Agentic AI refers to artificial intelligence systems that behave as agents—they perceive, reason, decide, and act within an environment. Unlike traditional reactive AI, agentic systems pursue goals, adapt to changes, and sometimes exhibit emergent behavior. These agents can be embodied (like robots), virtual (like digital assistants), or hybrid.

Agentic AI often includes:

- Autonomy – The ability to act independently.
- Goal orientation – Pursuing predefined or inferred objectives.
- Adaptability – Adjusting actions based on feedback.
- Interactivity – Communicating with users, systems, or the environment.

The rise of Large Language Models (LLMs), reinforcement learning, and planning algorithms has made such systems increasingly feasible.
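To make the perceive, decide, act cycle concrete, here is a minimal sketch using a toy thermostat agent. The `ThermostatAgent` class, the `run` helper, and the dictionary-based environment are all illustrative assumptions, not part of any particular framework.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatAgent:
    """Toy agent (hypothetical): keeps a room near a target temperature."""
    target: float
    log: list = field(default_factory=list)

    def perceive(self, environment: dict) -> float:
        # Perceive: read the relevant part of the environment.
        return environment["temperature"]

    def decide(self, temperature: float) -> str:
        # Reason and decide: compare the observation with the goal.
        if temperature < self.target - 0.5:
            return "heat"
        if temperature > self.target + 0.5:
            return "cool"
        return "idle"

    def act(self, action: str, environment: dict) -> None:
        # Act: change the environment, then record what was done.
        delta = {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]
        environment["temperature"] += delta
        self.log.append(action)


def run(agent: ThermostatAgent, environment: dict, steps: int) -> dict:
    # One iteration = one pass through perceive -> decide -> act.
    for _ in range(steps):
        observation = agent.perceive(environment)
        action = agent.decide(observation)
        agent.act(action, environment)
    return environment
```

Real agents replace each method with something far richer, but the loop structure tends to stay the same.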

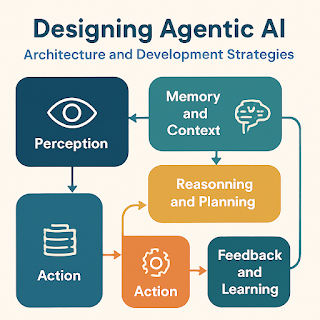

Core Architectural Components of Agentic AI

Designing agentic AI involves combining traditional AI modules with more dynamic and modular agent frameworks. Below are key architectural components:

1. Perception Layer

This layer is responsible for sensing and interpreting environmental data. For virtual agents, it includes NLP for understanding inputs like text or speech. For embodied agents, it includes sensors like LiDAR, cameras, or audio feeds.

Technologies used:

- NLP models (e.g., BERT, OpenAI's GPT models)
- Computer vision libraries (e.g., OpenCV, TensorFlow)
- Audio processing (e.g., Whisper for speech recognition, WaveNet for speech synthesis)
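One way to think about the perception layer is as a function that turns raw input into a structured observation the rest of the agent can use. The sketch below assumes a text-only virtual agent; the `Percept` type and the `INTENT_KEYWORDS` table are hypothetical, and the keyword lookup is a stand-in for a real NLP model.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Percept:
    modality: str    # e.g. "text", "image", "audio"
    intent: str      # coarse label the planner can act on
    entities: tuple  # extracted values, here just digit strings
    raw: str         # original input, kept for traceability


# Hypothetical keyword table standing in for a real NLP model.
INTENT_KEYWORDS = {
    "refund": "request_refund",
    "cancel": "cancel_order",
    "status": "check_status",
}


def perceive_text(message: str) -> Percept:
    lowered = message.lower()
    intent = "unknown"
    # First matching keyword wins; dicts preserve insertion order.
    for keyword, label in INTENT_KEYWORDS.items():
        if keyword in lowered:
            intent = label
            break
    entities = tuple(re.findall(r"\d+", message))
    return Percept("text", intent, entities, message)
```

Keeping the output type the same across modalities means a vision or audio front end could later be swapped in without changing the planner.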

2. Memory and Context Engine

Agentic behavior depends heavily on memory—both short-term (working memory) and long-term (episodic or semantic memory). This enables goal tracking, task switching, and continuity in conversation or activity.

Common building blocks:

- Vector databases (e.g., FAISS, Pinecone)
- Knowledge graphs
- Caching systems (e.g., Redis)

Memory is essential for contextualization and temporal reasoning, particularly for complex agents interacting over time.
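The split between short-term and long-term memory can be sketched in a few lines. This is a toy version under stated assumptions: `embed` is a bag-of-words stand-in for a real embedding model, the long-term store is a plain list rather than a vector database, and the `AgentMemory` class is hypothetical.

```python
import math
from collections import Counter, deque


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would call an embedding model.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class AgentMemory:
    def __init__(self, short_term_size: int = 3):
        # Short-term: bounded window of recent items (oldest dropped first).
        self.short_term = deque(maxlen=short_term_size)
        # Long-term: (embedding, text) pairs, searched by similarity.
        self.long_term = []

    def remember(self, text: str) -> None:
        self.short_term.append(text)
        self.long_term.append((embed(text), text))

    def recall(self, query: str, k: int = 1) -> list:
        # Rank every stored item by cosine similarity to the query.
        query_vec = embed(query)
        ranked = sorted(self.long_term,
                        key=lambda item: cosine(query_vec, item[0]),
                        reverse=True)
        return [text for _, text in ranked[:k]]
```

A linear scan like this only works for small stores; vector databases exist to make the same lookup fast at scale.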

3. Reasoning and Planning Module

This is the agent’s “brain.” It reasons about the current state, forecasts outcomes, and plans sequences of actions. Depending on the agent’s design, it may use:

- Rule-based logic
- Symbolic AI (e.g., Prolog)
- Reinforcement learning
- LLM-based reasoning driven by prompting
- Planning libraries (e.g., PDDL-based solvers)

Reasoning is where strategic and adaptive behavior emerges.
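As a rough illustration of what a planner does, the sketch below runs a breadth-first search over states described by sets of facts, in the spirit of STRIPS-style planning. It is not a PDDL solver, and the `Action` type and the example refund actions are hypothetical.

```python
from collections import deque
from typing import NamedTuple, Optional


class Action(NamedTuple):
    name: str
    preconditions: frozenset  # facts that must hold before the action
    add: frozenset            # facts the action makes true
    delete: frozenset         # facts the action makes false


def plan(initial: frozenset, goal: frozenset, actions: list) -> Optional[list]:
    """Breadth-first search over states; returns a shortest list of action names."""
    frontier = deque([(initial, [])])
    seen = {initial}
    while frontier:
        state, path = frontier.popleft()
        if goal <= state:  # every goal fact holds in this state
            return path
        for action in actions:
            if action.preconditions <= state:
                next_state = (state - action.delete) | action.add
                if next_state not in seen:
                    seen.add(next_state)
                    frontier.append((next_state, path + [action.name]))
    return None  # goal unreachable from the initial state


# Hypothetical actions for a customer-support agent.
ACTIONS = [
    Action("look_up_order", frozenset({"have_order_id"}),
           frozenset({"order_known"}), frozenset()),
    Action("check_policy", frozenset({"order_known"}),
           frozenset({"refund_allowed"}), frozenset()),
    Action("issue_refund", frozenset({"order_known", "refund_allowed"}),
           frozenset({"refunded"}), frozenset()),
]
```

Calling `plan(frozenset({"have_order_id"}), frozenset({"refunded"}), ACTIONS)` yields the three actions in dependency order. Exhaustive search does not scale to large state spaces, which is where heuristics or learned policies come in.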

4. Action Module

This controls how the agent executes decisions. In virtual agents, it might send messages, trigger APIs, or automate workflows. In robots, this module handles movement and actuation.

Action modules should include fallback protocols, validation mechanisms, and real-time monitoring to handle failures or unexpected outputs.
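The fallback and validation ideas can be combined into a small execution wrapper. This is a sketch under assumptions: the `execute` function, the `ActionResult` type, and the retry count are all hypothetical choices, and a production version would also log each failure.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ActionResult:
    ok: bool
    value: object
    attempts: int
    used_fallback: bool = False


def execute(action: Callable[[], object],
            validate: Callable[[object], bool],
            fallback: Callable[[], object],
            max_attempts: int = 3) -> ActionResult:
    for attempt in range(1, max_attempts + 1):
        try:
            value = action()
        except Exception:
            continue  # treat an exception as a failed attempt and retry
        if validate(value):
            return ActionResult(True, value, attempt)
    # Every attempt raised or produced invalid output: use the fallback.
    return ActionResult(False, fallback(), max_attempts, used_fallback=True)
```

The validator is what catches "successful" calls that return unusable output, which a plain try/except would let through.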

5. Feedback and Learning Engine

Agentic AI thrives on iteration. A robust feedback loop allows the system to learn from outcomes, user responses, or environmental feedback. This is critical for adaptability.

Methods include:

- Online learning
- Reinforcement learning
- Imitation learning
- Active learning (querying humans for feedback)
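A simple instance of learning from outcomes is an epsilon-greedy bandit that keeps a running average reward per strategy and updates it after every interaction. The `StrategyLearner` class and its parameters are hypothetical; this is one small example of online learning, not a full reinforcement learning setup.

```python
import random


class StrategyLearner:
    """Epsilon-greedy choice between strategies, updated online from rewards."""

    def __init__(self, strategies, epsilon=0.1, seed=0):
        self.values = {s: 0.0 for s in strategies}  # running mean reward
        self.counts = {s: 0 for s in strategies}    # times each was tried
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def choose(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))  # explore
        return max(self.values, key=self.values.get)   # exploit

    def update(self, strategy: str, reward: float) -> None:
        # Incremental mean: new = old + (reward - old) / n
        self.counts[strategy] += 1
        n = self.counts[strategy]
        self.values[strategy] += (reward - self.values[strategy]) / n
```

The reward could come from any of the feedback sources mentioned above, for example a user rating or whether a task completed.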

Key Design Principles for Agentic AI

Building an agent isn't just about assembling components. It requires a strategic approach to architecture, goals, safety, and human alignment. Here are essential design principles:

Modularity

Break down functions into clear modules (perception, memory, planning, etc.). This enhances maintainability, testing, and component reuse.
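In Python, one way to express this kind of modularity is with `typing.Protocol` interfaces, so the agent depends on what a module does rather than on a concrete class. The interface names and the trivial stand-in implementations below are hypothetical.

```python
from typing import Protocol


class Perception(Protocol):
    def perceive(self, raw: str) -> dict: ...


class Planner(Protocol):
    def plan(self, observation: dict) -> list: ...


class Actuator(Protocol):
    def act(self, step: str) -> str: ...


class Agent:
    """Depends only on the three interfaces, not on concrete classes."""

    def __init__(self, perception: Perception, planner: Planner, actuator: Actuator):
        self.perception = perception
        self.planner = planner
        self.actuator = actuator

    def handle(self, raw: str) -> list:
        observation = self.perception.perceive(raw)
        return [self.actuator.act(step) for step in self.planner.plan(observation)]


# Trivial stand-ins, handy for unit tests.
class WordPerception:
    def perceive(self, raw: str) -> dict:
        return {"words": raw.split()}


class OneStepPerWordPlanner:
    def plan(self, observation: dict) -> list:
        return [f"process:{word}" for word in observation["words"]]


class RecordingActuator:
    def __init__(self):
        self.done = []

    def act(self, step: str) -> str:
        self.done.append(step)
        return f"done:{step}"
```

Because each module can be replaced by a stand-in, the planner can be tested without a live model and the actuator without touching real systems.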

Goal and Task Abstraction

Use high-level goal abstraction so agents understand why they are doing something, not just how. This supports planning, prioritization, and flexibility.

Human-in-the-Loop (HITL)

Agentic systems must allow for human intervention and supervision. Even with high autonomy, fallback mechanisms for escalation or override are essential.
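An approval gate is one common way to implement this. In the sketch below, low-risk actions run directly and anything at or above a threshold waits for a human decision. The `RISK` table, the threshold, and the `approve` callback are all hypothetical; in practice the callback might open a ticket or prompt an operator.

```python
from typing import Callable

# Hypothetical risk scores per action; a real system would derive these from policy.
RISK = {"send_reply": 0.1, "issue_refund": 0.6, "delete_account": 0.95}


def run_with_oversight(action: str,
                       approve: Callable[[str], bool],
                       threshold: float = 0.5) -> str:
    # Unknown actions are treated as maximally risky.
    risk = RISK.get(action, 1.0)
    if risk < threshold:
        return "executed"
    # At or above the threshold, a human decides.
    return "executed_after_approval" if approve(action) else "blocked_by_human"
```

Defaulting unknown actions to the highest risk means a newly added tool cannot bypass review by accident.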

Explainability

To foster trust, especially in mission-critical applications, agents must explain their actions. Use traceable logic, visual dashboards, or LLM-generated reasoning.
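Traceable logic can be as simple as recording, for every step, what the agent saw, which rule it applied, and what it chose. The `DecisionTrace` and `TraceStep` classes below are a hypothetical sketch of that idea; the JSON form is the kind of output a dashboard could consume.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class TraceStep:
    observation: str
    rule: str
    action: str


@dataclass
class DecisionTrace:
    goal: str
    steps: list = field(default_factory=list)

    def record(self, observation: str, rule: str, action: str) -> None:
        self.steps.append(TraceStep(observation, rule, action))

    def explain(self) -> str:
        # Human-readable summary, one numbered line per recorded step.
        lines = [f"Goal: {self.goal}"]
        for i, step in enumerate(self.steps, start=1):
            lines.append(
                f"{i}. Saw '{step.observation}', applied '{step.rule}', "
                f"so chose '{step.action}'."
            )
        return "\n".join(lines)

    def to_json(self) -> str:
        # Machine-readable form; asdict converts nested dataclasses too.
        return json.dumps(asdict(self))
```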

Safety and Alignment

Agentic systems must align with ethical principles and avoid harmful or unintended behaviors. Include:

- Reward shaping for safe behavior
- Guardrails and constraints
- Model interpretability
- Ethics scoring or bias detection modules
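Of the items above, guardrails and constraints are the easiest to show in code. The sketch below assumes two hard limits checked before any tool call: a tool allowlist and a spending cap. The `Guardrails` class and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Guardrails:
    allowed_tools: frozenset
    max_spend: float
    spent: float = 0.0

    def check(self, tool: str, cost: float = 0.0) -> tuple:
        """Return (allowed, reason) without changing any state."""
        if tool not in self.allowed_tools:
            return False, f"tool '{tool}' is not on the allowlist"
        if self.spent + cost > self.max_spend:
            return False, "spend limit would be exceeded"
        return True, "ok"

    def commit(self, tool: str, cost: float = 0.0) -> bool:
        # Record the spend only when the call is allowed.
        allowed, _ = self.check(tool, cost)
        if allowed:
            self.spent += cost
        return allowed
```

Checks like these sit outside the model, so they hold even when the reasoning module produces an unexpected plan.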

Use Cases of Agentic AI

Agentic AI is rapidly being integrated across industries. Notable applications include:

- Customer support agents – AI assistants that learn from each interaction and solve problems proactively.
- Robotic process automation (RPA) – Agents managing end-to-end workflows in finance, HR, and logistics.
- Healthcare – Virtual health agents helping with triage, symptom checking, and chronic disease management.
- Autonomous drones and vehicles – Systems that navigate complex environments with minimal human control.
- Smart tutoring systems – Educational agents that adapt content and strategy based on learner behavior.

Development Tools and Frameworks

Several frameworks and tools support agentic AI development:

- LangChain – For LLM-driven agents with memory, tools, and planning capabilities.
- Auto-GPT / BabyAGI – Experimental autonomous agents built on OpenAI's GPT models.
- Microsoft Autonomous Agents SDK
- ReAct (Reason + Act) – A prompting pattern that interleaves reasoning steps with tool use.
- OpenAI Function Calling API – Lets the model request calls to developer-defined tools using structured arguments.
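The ReAct pattern is easier to follow as a loop: the model produces a thought, optionally names a tool to call, and sees the tool's output as an observation on the next turn. The sketch below does not use any real framework or API. `scripted_model` is a hard-coded stand-in for an LLM call, and `calculator`, `TOOLS`, and `react` are hypothetical names.

```python
def calculator(expression: str) -> str:
    # Deliberately tiny tool: adds two integers written as "a+b".
    left, right = expression.split("+")
    return str(int(left) + int(right))


TOOLS = {"calculator": calculator}


def scripted_model(transcript: list) -> dict:
    """Stand-in for an LLM call: returns a thought plus an action or an answer."""
    prefix = "Observation: "
    observations = [line for line in transcript if line.startswith(prefix)]
    if not observations:
        return {"thought": "I need to add the numbers.",
                "action": "calculator", "input": "19+23"}
    return {"thought": "I have the sum.",
            "answer": observations[-1][len(prefix):]}


def react(question: str, model, tools: dict, max_steps: int = 5) -> tuple:
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        step = model(transcript)
        transcript.append(f"Thought: {step['thought']}")
        if "answer" in step:
            return step["answer"], transcript
        # Run the requested tool and feed its output back as an observation.
        observation = tools[step["action"]](step["input"])
        transcript.append(f"Action: {step['action']}[{step['input']}]")
        transcript.append(f"Observation: {observation}")
    return None, transcript  # step budget exhausted without an answer
```

The `max_steps` cap matters in practice: without it, a model that never produces an answer would loop indefinitely.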

Challenges in Agentic AI Development

Despite the promise, several challenges remain:

- Goal drift – Agents deviating from intended objectives.
- Misalignment – Unintended side effects of reward design or instruction.
- Resource management – Keeping memory and compute usage efficient.
- Security risks – Especially for agents with access to tools, APIs, or real-world control.
- Ethical implications – Decision-making, bias, accountability.

Best Practices for Agentic AI Development

- Define agent boundaries clearly: what it can and cannot do.
- Use simulation environments (such as Gym/Gymnasium or Unity) for safe training.
- Set up robust logging and telemetry for monitoring.
- Incorporate synthetic and real data to balance generalization and realism.
- Iteratively test agent behavior in edge cases and stress scenarios.
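For the logging and telemetry point, one lightweight approach is to emit a structured JSON event per agent step using Python's standard `logging` module. The event fields, the `ListHandler` class, and the helper names below are hypothetical; the in-memory handler is only there to keep the example self-contained.

```python
import json
import logging
import time


class ListHandler(logging.Handler):
    """Keeps formatted records in memory; swap for a file or network handler in practice."""

    def __init__(self):
        super().__init__()
        self.records = []

    def emit(self, record: logging.LogRecord) -> None:
        self.records.append(self.format(record))


def make_agent_logger(name: str = "agent") -> tuple:
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep events out of the root logger
    handler = ListHandler()
    logger.addHandler(handler)
    return logger, handler


def log_step(logger: logging.Logger, step: int, action: str,
             started: float, ok: bool) -> None:
    # One JSON object per step makes the log easy to query later.
    event = {
        "step": step,
        "action": action,
        "ok": ok,
        "duration_ms": round((time.perf_counter() - started) * 1000, 3),
    }
    logger.info(json.dumps(event))
```

Structured events like these can also be replayed against edge-case scenarios to check that the agent behaves the same way after a change.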

The Future of Agentic AI

Agentic AI is heading toward multi-agent systems in which agents cooperate or compete to solve complex problems. Think swarm robotics, economic agents, or distributed problem-solving in enterprise AI.

With growing autonomy comes responsibility: designing architectures that combine power with principled governance is not optional—it is foundational.

Agentic AI may soon evolve into goal-seeking digital workers, personal concierges, or collaborative research partners. The design decisions we make today will shape their ethics, intelligence, and trustworthiness tomorrow.

